Last edited by 云天 on 2024-12-22 20:24

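【Project background】

With the rapid growth of smartphones and IoT technology, gesture control is gradually becoming part of everyday life as a new way of interacting with devices. The air-gesture screenshot feature on Huawei phones is a good example: it captures the screen by recognizing the user's gesture, which improves the user experience and shows what gesture control can do on mobile devices.

【Project design】

This project uses two 行空板 (UNIHIKER) boards to build a similar gesture-controlled screenshot feature. OpenCV, an open-source computer vision and machine learning library with a wide range of image and video processing functions, captures the camera frames. MediaPipe, a cross-platform framework developed by Google that bundles several machine learning models, including hand tracking, detects the hand landmarks in real time, and the gesture is derived from those landmarks.

The core of the project is sending an image between the two boards over IoT. When board 1 recognizes a fist in its camera image, it takes a screenshot and publishes it over IoT to board 2. When board 2 recognizes an open palm, it receives and displays the transmitted picture.

The project shows how gesture recognition can be applied in an IoT setting and how combining smart hardware with software makes interaction more direct and convenient. The same approach could be explored for smart homes, industrial automation, games, and entertainment.

【Hardware design】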

The camera connects to the UNIHIKER over USB, and a power bank supplies power.

【Preparation】

1. Install pyautogui on the UNIHIKER, then install scrot from the terminal: sudo apt-get install scrot

2. Install the MediaPipe library on the UNIHIKER. Installation guide: https://www.unihiker.com.cn/wiki ... 3%E4%B8%8B%E8%BD%BD
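Before running the programs it can help to confirm that the pieces from the two steps above are actually present. The following is a minimal sketch using only the standard library; `check_environment` is a hypothetical helper, and the module and tool names are taken from the preparation steps and from the import list of the programs in this post.

```python
import importlib.util
import shutil


def check_environment(modules, tools):
    """Return the Python modules and command-line tools that cannot be found."""
    missing_modules = [m for m in modules if importlib.util.find_spec(m) is None]
    missing_tools = [t for t in tools if shutil.which(t) is None]
    return missing_modules, missing_tools


# Names assumed from the preparation steps and the programs' imports.
mods, tools = check_environment(
    ["cv2", "mediapipe", "pyautogui", "siot", "unihiker"],
    ["scrot"],
)
print("missing modules:", mods)
print("missing tools:", tools)
```

Two empty lists mean that everything was found. The check only looks for the modules; it does not import them, so it runs quickly even on the board.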

【Programming】

1. Test drawing on the UNIHIKER screen and send a screenshot to the SIoT IoT platform.
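The screenshot travels as text: the image bytes are base64-encoded and prefixed so that the message is a data URI. The following is a minimal sketch of that encoding step using only the standard library. `to_data_uri` is a hypothetical helper, and the 8-byte PNG signature stands in for the contents of a real screenshot file.

```python
import base64


def to_data_uri(image_bytes, mime="image/png"):
    """Wrap raw image bytes in a base64 data URI string."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return f"data:{mime};base64,{encoded}"


# Stand-in for the bytes of a screenshot file (PNG signature only).
payload = to_data_uri(b"\x89PNG\r\n\x1a\n")
print(payload)
```

On the board, the resulting string would be what gets passed as `data` when publishing to the SIoT topic.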

2. Add fist-gesture recognition

Complete program for board 1 (the sender):

    import cv2
    import mediapipe as mp
    import math
    import sys
    import siot
    import time

    import pyautogui
    import numpy as np
    from unihiker import GUI
    import base64
    from io import BytesIO
    from PIL import Image
    from unihiker import Audio

    sys.path.append("/root/mindplus/.lib/thirdExtension/nick-base64-thirdex")


    def frame2base64(frame):
        """Encode an OpenCV BGR frame as base64 JPEG bytes."""
        frame = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
        img = Image.fromarray(frame)               # convert the frame to a PIL Image
        output_buffer = BytesIO()                  # in-memory buffer
        img.save(output_buffer, format='JPEG')     # write the JPEG into the buffer
        byte_data = output_buffer.getvalue()       # read the bytes back from memory
        base64_data = base64.b64encode(byte_data)  # encode as base64
        return base64_data


    def base642base64(frame):
        """Build the data-URI string that is published to SIoT."""
        # Note: the prefix says image/png although frame2base64 produces JPEG data.
        data = 'data:image/png;base64,'
        return data + frame2base64(frame).decode('ascii')


    # Touch-move callback: draws on the screen while the finger moves
    def mouse_move(x, y):
        global HuaDongJianGe
        global startX, startY, endX, endY, bs
        global 画图
        print(f"{startX}:{startY} {endX}:{endY}")
        # More than 0.5 s since the last event starts a new stroke
        if (time.time() - HuaDongJianGe) > 0.5:
            bs = 0
        HuaDongJianGe = time.time()
        if bs == 0:
            # First point of a stroke
            startX = x
            startY = y
            endX = x
            endY = y
            bs = 1
        else:
            # Continue the stroke from the previous end point
            startX = endX
            startY = endY
            endX = x
            endY = y
        画点 = u_gui.fill_circle(x=x, y=y, r=2, color="#0000FF")
        画图 = u_gui.draw_line(x0=startX, y0=startY, x1=endX, y1=endY, width=5, color="#0000FF")


    def qingkong():
        """Clear the screen."""
        u_gui.clear()


    def jietu():
        """Take a screenshot and publish it to the SIoT topic df/pic."""
        screenshot = pyautogui.screenshot()
        screenshot.save('screenshot.png')
        # IMREAD_COLOR always yields 3 channels, which frame2base64 expects
        img = cv2.imread("screenshot.png", cv2.IMREAD_COLOR)
        siot.publish(topic="df/pic", data=str(base642base64(img)))


    mp_hands = mp.solutions.hands
    hands = mp_hands.Hands(static_image_mode=False, max_num_hands=1, min_detection_confidence=0.5, min_tracking_confidence=0.5)
    mp_drawing = mp.solutions.drawing_utils
    u_audio = Audio()
    u_gui = GUI()
    startX = 0
    startY = 0
    endX = 0
    endY = 0
    bs = 0    # 1 while a stroke is in progress
    bs2 = 0   # 1 once the current fist has already triggered a screenshot
    HuaDongJianGe = time.time()
    u_gui.on_mouse_move(mouse_move)
    siot.init(client_id="7134840160197196", server="10.1.2.3", port=1883, user="siot", password="dfrobot")
    siot.connect()
    siot.loop()


    def distance(point1, point2):
        return math.sqrt((point2.x - point1.x)**2 + (point2.y - point1.y)**2)


    # Classify the gesture from the hand landmarks
    def determine_gesture(landmark):
        # Thresholds on the ratio d = (middle fingertip to wrist) / (middle MCP joint to wrist)
        fist_threshold = 1         # below this the finger is curled: fist
        open_hand_threshold = 1.5  # above this the finger is extended: open hand

        # Middle fingertip, middle finger MCP joint, and wrist
        middle_FINGER_TIP = landmark.landmark[mp_hands.HandLandmark.MIDDLE_FINGER_TIP]
        middle_FINGER_MCP = landmark.landmark[mp_hands.HandLandmark.MIDDLE_FINGER_MCP]
        wrist = landmark.landmark[mp_hands.HandLandmark.WRIST]
        d1 = distance(middle_FINGER_TIP, wrist)
        d2 = distance(middle_FINGER_MCP, wrist)
        d = d1 / d2
        print(d)

        # Decide the gesture from the ratio
        if d < fist_threshold:
            return "Fist"
        elif d > open_hand_threshold:
            return "Open Hand"
        else:
            return "Unknown"


    # Open the camera
    cap = cv2.VideoCapture(0)

    while cap.isOpened():
        success, image = cap.read()
        if not success:
            continue
        image = cv2.resize(image, (240, 320))

        # Convert BGR to RGB for MediaPipe and mirror the image
        image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)
        image = cv2.flip(image, 1)

        # Run hand-landmark detection
        results = hands.process(image)

        # Draw the landmarks and their connections
        image = cv2.cvtColor(image, cv2.COLOR_RGB2BGR)
        if results.multi_hand_landmarks:
            for hand_landmarks in results.multi_hand_landmarks:
                mp_drawing.draw_landmarks(image, hand_landmarks, mp_hands.HAND_CONNECTIONS)
                # Classify the gesture
                gesture = determine_gesture(hand_landmarks)
                print(gesture)
                if gesture == "Fist":
                    if bs2 == 0:
                        # Trigger once per fist: screenshot, clear, beep
                        bs2 = 1
                        jietu()
                        qingkong()
                        u_audio.play("ding.mp3")
                else:
                    bs2 = 0
                #cv2.putText(image, gesture, (50, 50), cv2.FONT_HERSHEY_SIMPLEX, 1, (0, 0, 255), 2)

        # Show the image
        #cv2.imshow("Image", image)

        # Press 'q' to quit
        #if cv2.waitKey(1) & 0xFF == ord('q'):
        #    break

    # Release resources
    cap.release()
    #cv2.destroyAllWindows()
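The gesture test in `determine_gesture` compares two distances from the wrist: to the middle fingertip and to the middle finger's MCP joint. Dividing one by the other makes the result independent of how large the hand appears in the frame. The sketch below reproduces that rule on plain points so it can be tried without a camera; `Point` and `classify` are hypothetical stand-ins, the coordinates are made up, and the thresholds are the ones from the program above.

```python
import math
from collections import namedtuple

# Hypothetical stand-in for a MediaPipe landmark (normalized x, y).
Point = namedtuple("Point", ["x", "y"])


def classify(tip, mcp, wrist, fist_threshold=1.0, open_threshold=1.5):
    """Classify by the ratio of tip-to-wrist over MCP-to-wrist distance."""
    d_tip = math.hypot(tip.x - wrist.x, tip.y - wrist.y)
    d_mcp = math.hypot(mcp.x - wrist.x, mcp.y - wrist.y)
    if d_mcp == 0:
        return "Unknown"  # degenerate landmarks, avoid dividing by zero
    ratio = d_tip / d_mcp
    if ratio < fist_threshold:
        return "Fist"
    if ratio > open_threshold:
        return "Open Hand"
    return "Unknown"


wrist = Point(0.5, 0.9)
mcp = Point(0.5, 0.6)
print(classify(Point(0.5, 0.2), mcp, wrist))  # extended finger, ratio about 2.3
print(classify(Point(0.5, 0.7), mcp, wrist))  # curled finger, ratio about 0.67
print(classify(Point(0.5, 0.5), mcp, wrist))  # in between, ratio about 1.3
```

Ratios between the two thresholds are deliberately left as "Unknown", which keeps a half-closed hand from flipping between the two gestures.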

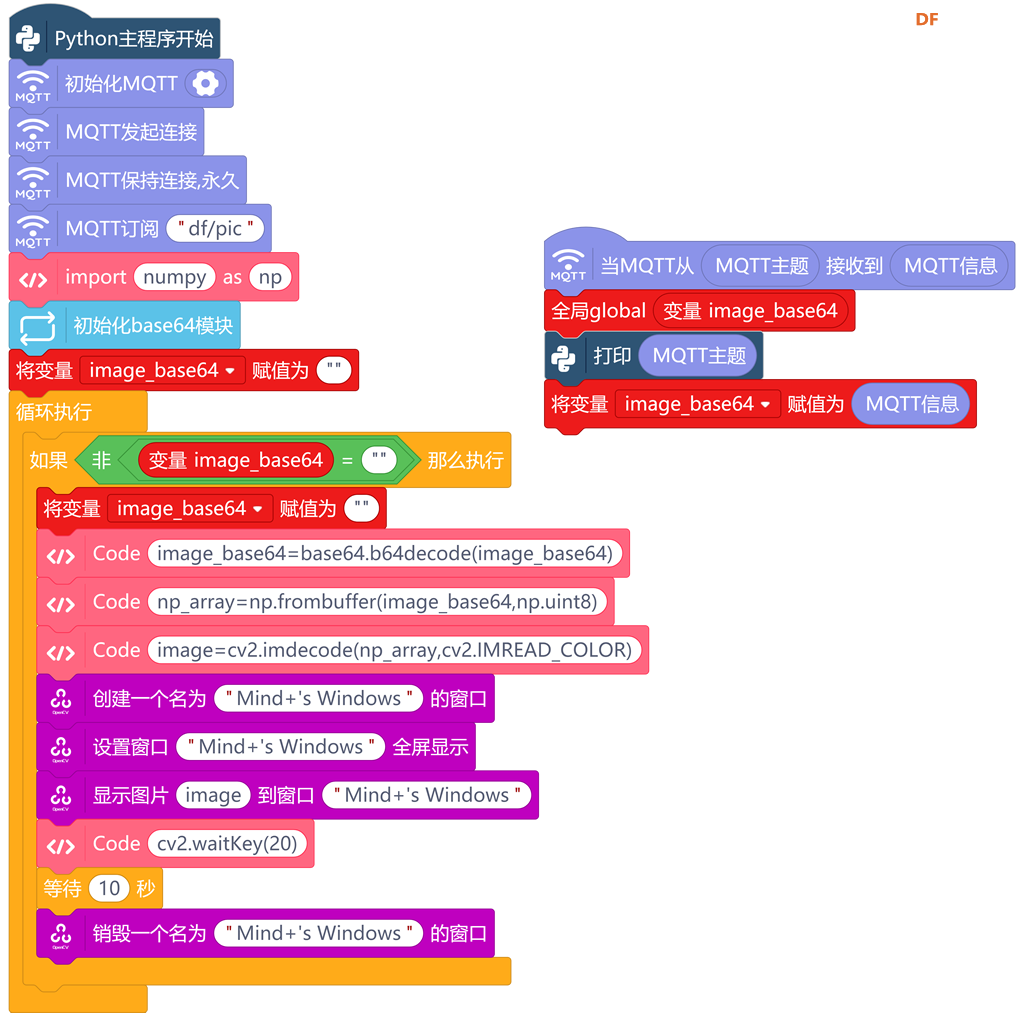

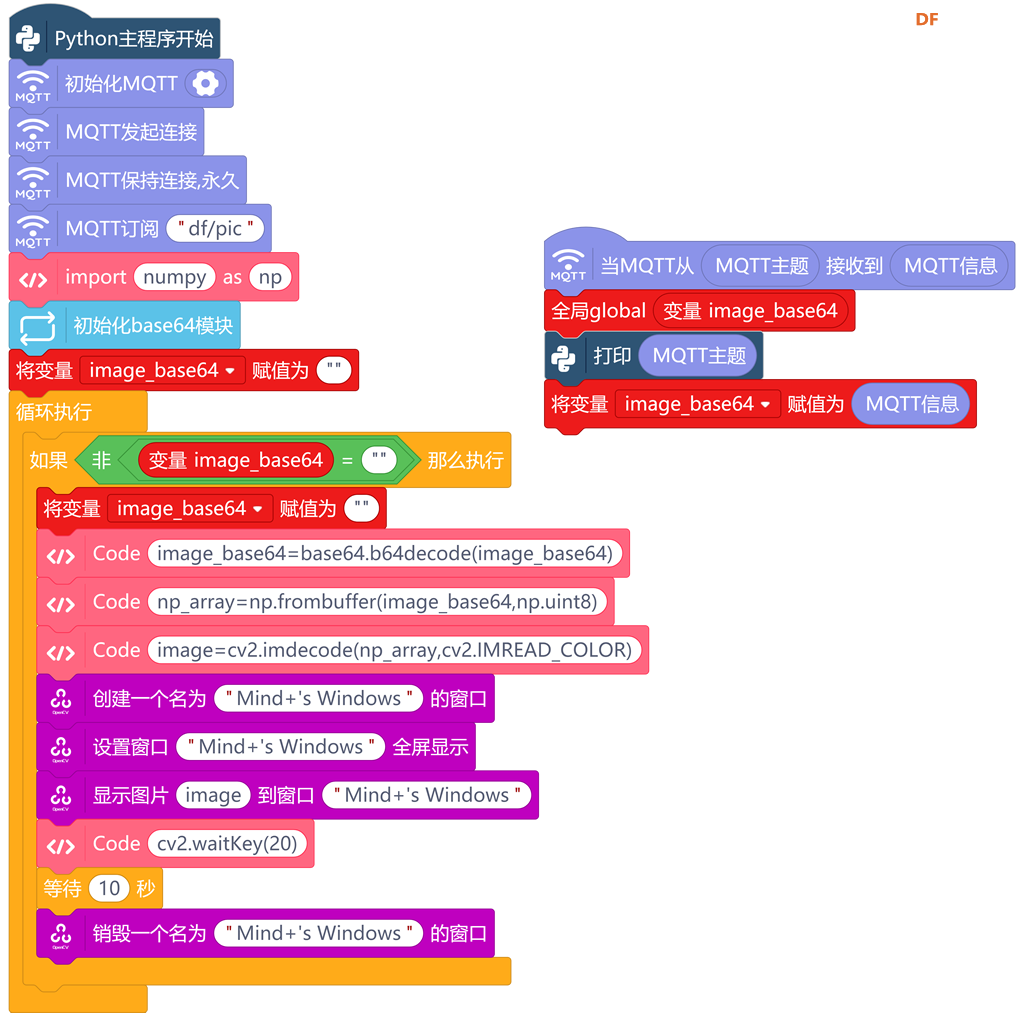
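The camera loop sees the same fist in many consecutive frames, so the program uses the flag `bs2` to take only one screenshot per fist and to re-arm once the gesture changes. The same idea can be written as a small class, shown here as a sketch. `GestureLatch` is a hypothetical name, and the frame sequence is invented for illustration.

```python
class GestureLatch:
    """Fire once when the target gesture appears, re-arm when it disappears."""

    def __init__(self, target):
        self.target = target
        self.active = False

    def update(self, gesture):
        """Return True only on the first frame in which the target is seen."""
        if gesture == self.target:
            if not self.active:
                self.active = True
                return True
            return False
        self.active = False
        return False


latch = GestureLatch("Fist")
frames = ["Unknown", "Fist", "Fist", "Fist", "Open Hand", "Fist"]
fired = [latch.update(g) for g in frames]
print(fired)  # [False, True, False, False, False, True]
```

In the camera loop, the screenshot, screen clear, and sound would run only when `update` returns True.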

3. Receive the screenshot

4. Receiving program triggered by the open-palm gesture

The listing posted for this step is the same as the sender program in step 2: it still publishes a screenshot when it sees a fist and contains no code that subscribes to the topic or displays a picture on an open palm.

    import cv2
    import mediapipe as mp
    import math
    import sys
    import siot
    import time
    import pyautogui
    import numpy as np
    from unihiker import GUI
    import base64
    from io import BytesIO
    from PIL import Image
    from unihiker import Audio

    sys.path.append("/root/mindplus/.lib/thirdExtension/nick-base64-thirdex")


    def frame2base64(frame):
        """Encode an OpenCV BGR frame as base64 JPEG bytes."""
        frame = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
        img = Image.fromarray(frame)               # convert the frame to a PIL Image
        output_buffer = BytesIO()                  # in-memory buffer
        img.save(output_buffer, format='JPEG')     # write the JPEG into the buffer
        byte_data = output_buffer.getvalue()       # read the bytes back from memory
        base64_data = base64.b64encode(byte_data)  # encode as base64
        return base64_data


    def base642base64(frame):
        """Build the data-URI string that is published to SIoT."""
        # Note: the prefix says image/png although frame2base64 produces JPEG data.
        data = 'data:image/png;base64,'
        return data + frame2base64(frame).decode('ascii')


    # Touch-move callback: draws on the screen while the finger moves
    def mouse_move(x, y):
        global HuaDongJianGe
        global startX, startY, endX, endY, bs
        global 画图
        print(f"{startX}:{startY} {endX}:{endY}")
        # More than 0.5 s since the last event starts a new stroke
        if (time.time() - HuaDongJianGe) > 0.5:
            bs = 0
        HuaDongJianGe = time.time()
        if bs == 0:
            # First point of a stroke
            startX = x
            startY = y
            endX = x
            endY = y
            bs = 1
        else:
            # Continue the stroke from the previous end point
            startX = endX
            startY = endY
            endX = x
            endY = y
        画点 = u_gui.fill_circle(x=x, y=y, r=2, color="#0000FF")
        画图 = u_gui.draw_line(x0=startX, y0=startY, x1=endX, y1=endY, width=5, color="#0000FF")


    def qingkong():
        """Clear the screen."""
        u_gui.clear()


    def jietu():
        """Take a screenshot and publish it to the SIoT topic df/pic."""
        screenshot = pyautogui.screenshot()
        screenshot.save('screenshot.png')
        # IMREAD_COLOR always yields 3 channels, which frame2base64 expects
        img = cv2.imread("screenshot.png", cv2.IMREAD_COLOR)
        siot.publish(topic="df/pic", data=str(base642base64(img)))


    mp_hands = mp.solutions.hands
    hands = mp_hands.Hands(static_image_mode=False, max_num_hands=1, min_detection_confidence=0.5, min_tracking_confidence=0.5)
    mp_drawing = mp.solutions.drawing_utils
    u_audio = Audio()
    u_gui = GUI()
    startX = 0
    startY = 0
    endX = 0
    endY = 0
    bs = 0    # 1 while a stroke is in progress
    bs2 = 0   # 1 once the current fist has already triggered a screenshot
    HuaDongJianGe = time.time()
    u_gui.on_mouse_move(mouse_move)
    siot.init(client_id="7134840160197196", server="10.1.2.3", port=1883, user="siot", password="dfrobot")
    siot.connect()
    siot.loop()


    def distance(point1, point2):
        return math.sqrt((point2.x - point1.x)**2 + (point2.y - point1.y)**2)


    # Classify the gesture from the hand landmarks
    def determine_gesture(landmark):
        # Thresholds on the ratio d = (middle fingertip to wrist) / (middle MCP joint to wrist)
        fist_threshold = 1         # below this the finger is curled: fist
        open_hand_threshold = 1.5  # above this the finger is extended: open hand

        # Middle fingertip, middle finger MCP joint, and wrist
        middle_FINGER_TIP = landmark.landmark[mp_hands.HandLandmark.MIDDLE_FINGER_TIP]
        middle_FINGER_MCP = landmark.landmark[mp_hands.HandLandmark.MIDDLE_FINGER_MCP]
        wrist = landmark.landmark[mp_hands.HandLandmark.WRIST]
        d1 = distance(middle_FINGER_TIP, wrist)
        d2 = distance(middle_FINGER_MCP, wrist)
        d = d1 / d2
        print(d)

        # Decide the gesture from the ratio
        if d < fist_threshold:
            return "Fist"
        elif d > open_hand_threshold:
            return "Open Hand"
        else:
            return "Unknown"


    # Open the camera
    cap = cv2.VideoCapture(0)

    while cap.isOpened():
        success, image = cap.read()
        if not success:
            continue
        image = cv2.resize(image, (240, 320))

        # Convert BGR to RGB for MediaPipe and mirror the image
        image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)
        image = cv2.flip(image, 1)

        # Run hand-landmark detection
        results = hands.process(image)

        # Draw the landmarks and their connections
        image = cv2.cvtColor(image, cv2.COLOR_RGB2BGR)
        if results.multi_hand_landmarks:
            for hand_landmarks in results.multi_hand_landmarks:
                mp_drawing.draw_landmarks(image, hand_landmarks, mp_hands.HAND_CONNECTIONS)
                # Classify the gesture
                gesture = determine_gesture(hand_landmarks)
                print(gesture)
                if gesture == "Fist":
                    if bs2 == 0:
                        # Trigger once per fist: screenshot, clear, beep
                        bs2 = 1
                        jietu()
                        qingkong()
                        u_audio.play("ding.mp3")
                else:
                    bs2 = 0
                #cv2.putText(image, gesture, (50, 50), cv2.FONT_HERSHEY_SIMPLEX, 1, (0, 0, 255), 2)

        # Show the image
        #cv2.imshow("Image", image)

        # Press 'q' to quit
        #if cv2.waitKey(1) & 0xFF == ord('q'):
        #    break

    # Release resources
    cap.release()
    #cv2.destroyAllWindows()
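According to the project design, board 2 displays the received picture when it sees an open palm. The message published on `df/pic` is a data-URI string, so the receiving side first has to turn it back into image bytes. The following is a minimal standard-library sketch of that decoding step, assuming the payload has the same `data:image/png;base64,` form that `base642base64` produces. `decode_data_uri` is a hypothetical helper, and subscribing to the topic through siot is not shown.

```python
import base64


def decode_data_uri(payload):
    """Split a 'data:<mime>;base64,<data>' string into (mime, raw bytes)."""
    header, sep, encoded = payload.partition(",")
    if not sep or not header.startswith("data:") or not header.endswith(";base64"):
        raise ValueError("not a base64 data URI")
    mime = header[len("data:"):-len(";base64")]
    return mime, base64.b64decode(encoded)


# Stand-in message: the 8-byte PNG signature, encoded the way the sender does it.
message = "data:image/png;base64," + base64.b64encode(b"\x89PNG\r\n\x1a\n").decode("ascii")
mime, raw = decode_data_uri(message)
print(mime, len(raw))
```

The decoded bytes could then be opened with `Image.open(BytesIO(raw))` from PIL and drawn on the screen once the gesture classifier returns "Open Hand".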

【Demo video】

Shanghai Public Network Security Filing No. 31011502402448
