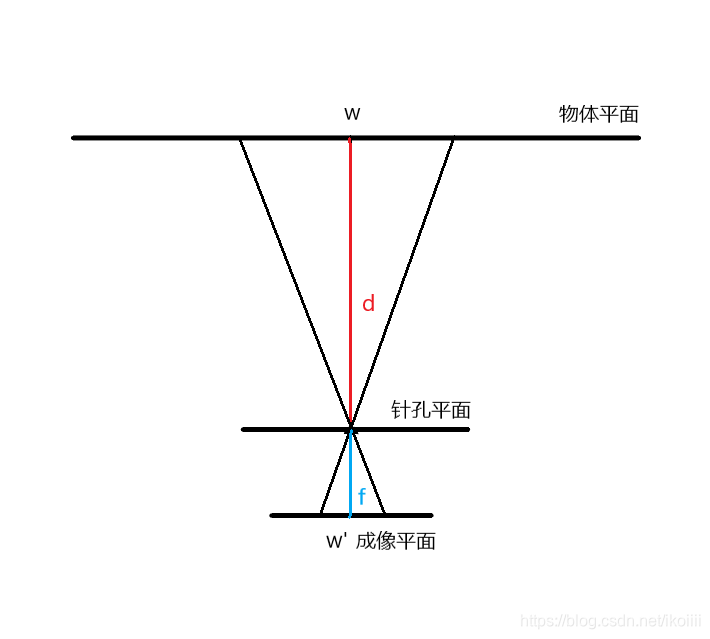

中间通过红蓝的垂线是相机的主光轴,d是被测物体至镜头的距离,f为相机镜头的焦距,w为被测物体的实际宽度(高度),w'为物体在成像平面(感光元件)上的宽度(高度)。

- import cv2

- import math

- import numpy as np

-

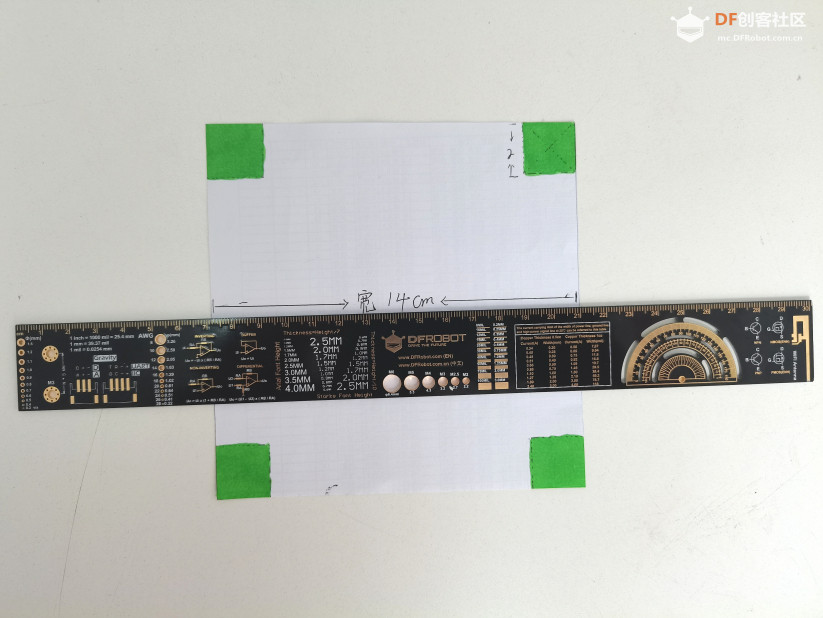

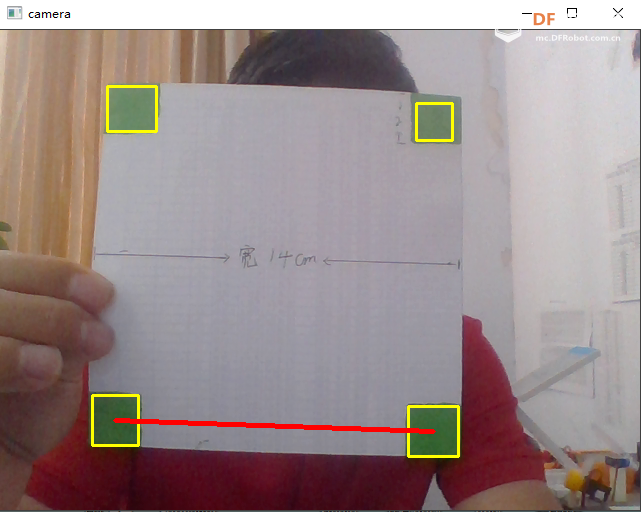

- ball_color = 'green'

- boxs=[]

- color_dist = {'red': {'Lower': np.array([0, 60, 60]), 'Upper': np.array([6, 255, 255])},

- 'blue': {'Lower': np.array([100, 80, 46]), 'Upper': np.array([124, 255, 255])},

- 'green': {'Lower': np.array([35, 43, 35]), 'Upper': np.array([90, 255, 255])},

- }

-

- cap = cv2.VideoCapture(0)

- cv2.namedWindow('camera', cv2.WINDOW_AUTOSIZE)

-

- while cap.isOpened():

- ret, frame = cap.read()

- if ret:

- if frame is not None:</p><p> #图像处理</p><p> gs_frame = cv2.GaussianBlur(frame, (5, 5), 0) # 高斯模糊

- hsv = cv2.cvtColor(gs_frame, cv2.COLOR_BGR2HSV) # 转化成HSV图像

- erode_hsv = cv2.erode(hsv, None, iterations=2) # 腐蚀 粗的变细,用于去除噪声点

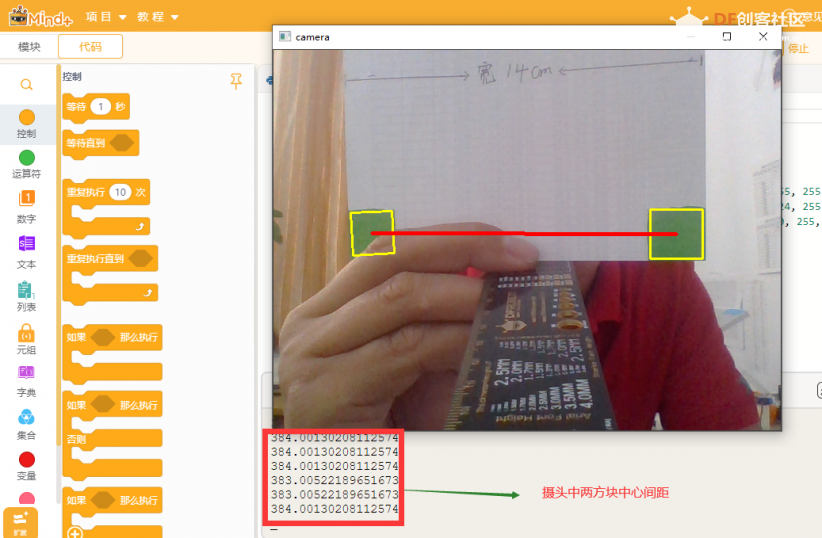

- inRange_hsv = cv2.inRange(erode_hsv, color_dist[ball_color]['Lower'], color_dist[ball_color]['Upper']) #去除背景部分,将绿色以外的其他部分去除掉,并将图像转化为二值化图像</p><p> #绘制矩形边框

- cnts = cv2.findContours(inRange_hsv.copy(), cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)[-2] #使用该函数找出方框外边界,并存储在cnts中

- k=1

- if cnts:

- #找到两个相邻的方块,并计算中心间距

- for i in cnts:

-

- rect = cv2.minAreaRect(i)

- box = cv2.boxPoints(rect)

- if k==1:

- boxs1=box

- if k==2:

- boxs2=box

- k=k+1

- cv2.drawContours(frame, [np.int0(box)], -1, (0, 255, 255), 2) #在原图像上将分析出的矩形边界绘制出来

-

- if k>2:

- x1=int((boxs1[0][0]+boxs1[1][0]+boxs1[2][0]+boxs1[3][0])/4)

- y1=int((boxs1[0][1]+boxs1[1][1]+boxs1[2][1]+boxs1[3][1])/4)

- x2=int((boxs2[0][0]+boxs2[1][0]+boxs2[2][0]+boxs2[3][0])/4)

- y2=int((boxs2[0][1]+boxs2[1][1]+boxs2[2][1]+boxs2[3][1])/4)

-

- cv2.line(frame,(x1,y1),(x2,y2), (0, 0, 255), 4,8) #在原图像上将找到的相邻方块的中心点连线

- lenght=math.sqrt((x1-x2)**2+(y1-y2)**2)

- print(lenght)

- cv2.imshow('camera', frame)

- cv2.waitKey(1)

- else:

- print("无画面")

- else:

- print("无法读取摄像头!")

-

- cap.release()

- cv2.waitKey(0)

- cv2.destroyAllWindows()

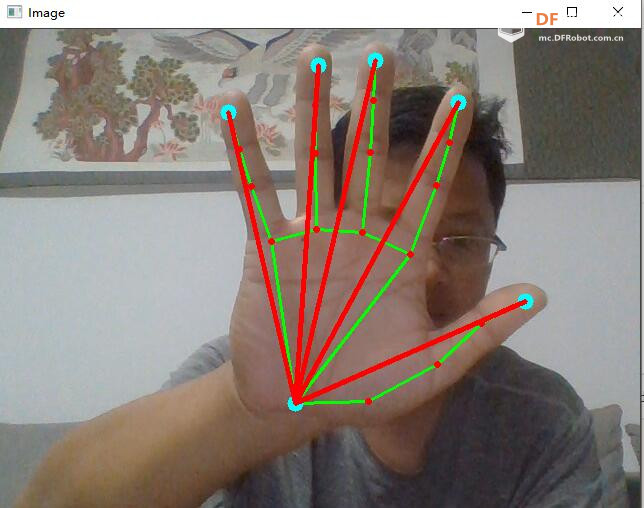

【手距控灯】

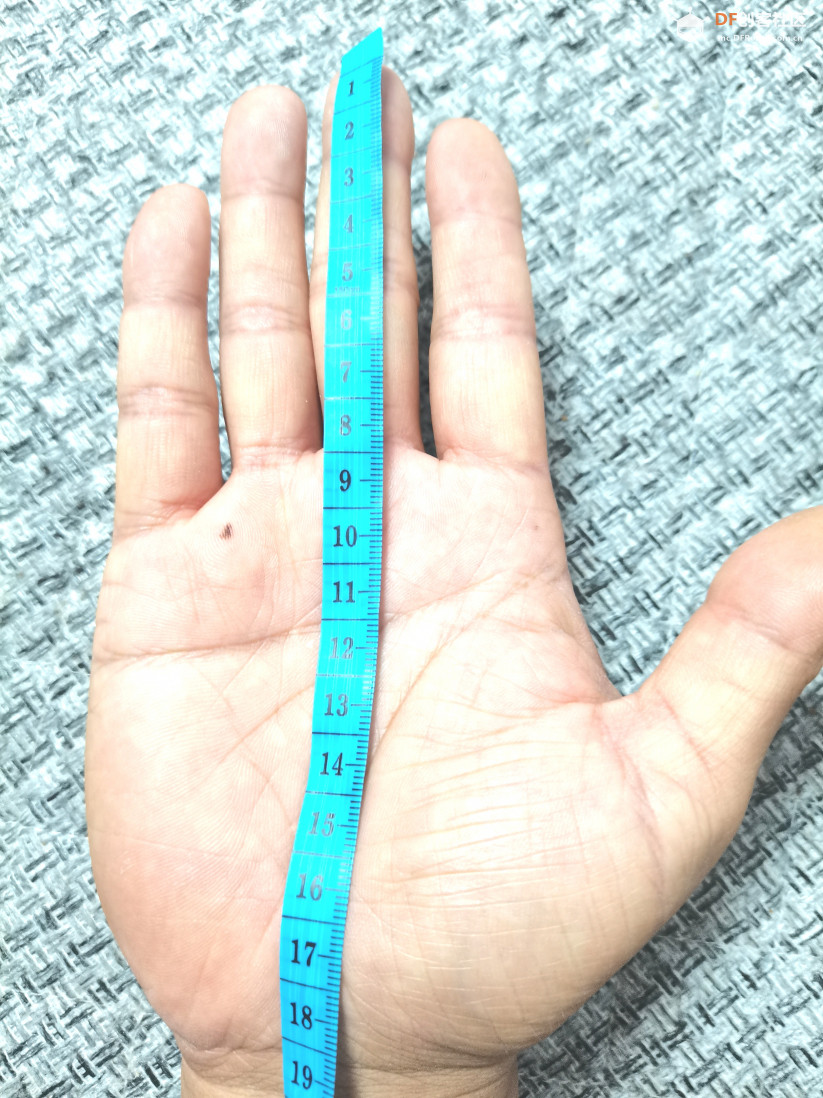

测量实际中指到腕关节的距离,估测为18cm

-

- import cv2

- import math

- from handutil import HandDetector

- import numpy as np

- import time

- from pinpong.board import Board,Pin,NeoPixel #导入neopixel类

- Board("uno").begin() #初始化,选择板型(uno、leonardo、xugu)和端口号,不输入端口号则进行自动识别

- NEOPIXEL_PIN = Pin(Pin.D7)

- PIXELS_NUM = 7 #灯数

- np = NeoPixel(NEOPIXEL_PIN,PIXELS_NUM)

- # 打开摄像头

- cap = cv2.VideoCapture(0)

- # 创建一个手势识别对象

- detector = HandDetector()

-

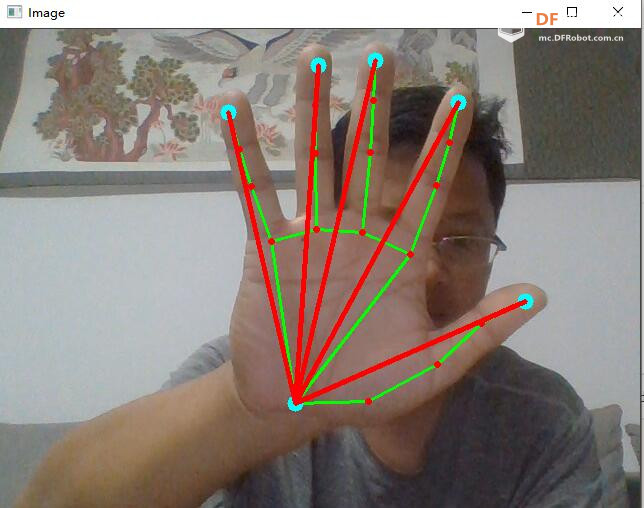

- # 指尖列表,分别代表腕关节,大拇指、食指、中指、无名指和小指的指尖

- tip_ids = [0,4, 8, 12, 16, 20]

- lenght=0

- num=0

- while True:

- success, img = cap.read()

-

- if success:

- # 检测手势

- img = detector.find_hands(img, draw=True)

- # 获取手势数据

- lmslist = detector.find_positions(img)

- if len(lmslist) > 0:

- fingersX = []

- fingersY = []

- for tid in tip_ids:

- # 找到每个指尖的位置

- x, y = lmslist[tid][1], lmslist[tid][2]

- cv2.circle(img, (x, y), 8, (255, 255, 0), cv2.FILLED)

-

- if tid == 0:

- fingersX.append(x)

- fingersY.append(y)

-

- if tid == 4:

- fingersX.append(x)

- fingersY.append(y)

-

- if tid == 8:

- fingersX.append(x)

- fingersY.append(y)

-

- if tid == 12:

- fingersX.append(x)

- fingersY.append(y)

-

- if tid == 16:

- fingersX.append(x)

- fingersY.append(y)

-

- if tid == 20:

- fingersX.append(x)

- fingersY.append(y)

- #计算图像中指到腕关节的距离,并求和

-

- lenght=math.sqrt((fingersX[0]-fingersX[3])**2+(fingersY[0]-fingersY[3])**2)

- #18为实际中指到腕关节的距离

- lenght=640/lenght*18

- for num in range(0,int(lenght/80*7)):

- # 找到对应的手势图片并显示

- if num>6:

- num=6

- np[num] = (255, 0 ,0)

- if num<6:

- for k in range(num,7):

- np[k] = (0, 0 ,0)

- for i in range(1,6):

- cv2.line(img, (fingersX[0], fingersY[0]), (fingersX[i], fingersY[i]), (0, 0, 255), 4,8)

- print(lenght)

-

- cv2.imshow('Image', img)

-

- k = cv2.waitKey(1)

- if k == ord('q'):

- break

-

- cap.release()

- cv2.destroyAllWindows()

沪公网安备31011502402448

沪公网安备31011502402448